Saturday, December 22, 2007

The power of checklists (especially when automated)

I'd like to take this approach up a notch though: if you're in the software business, you actually need to AUTOMATE your checklists. Otherwise it's still very easy for a human being to skip a step. Scripts don't usually make that mistake. Yes, a human being still needs to run the script and to make intelligent decisions about the overall outcome of its execution. If you do take this approach, make sure your scripts also have checks and balances embedded in them -- also known as tests. For example, if your script retrieves a file over the network with wget, make sure the file actually gets on your local file system. A simple 'ls' of the file will convince you that the operation succeeded.

As somebody else once said, the goal here is to replace you (the sysadmin or the developer) with a small script. That will free you up to do more fun work.
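To make the idea concrete, here is a minimal Python sketch of a checklist runner with the checks built in. Each step pairs an action with a test of its outcome, and the run stops at the first failed check so a human only has to review the summary. The wget step is a hypothetical example based on the scenario above; the function names, URL and destination path are made up for illustration.

```python
import os
import subprocess


def run_checklist(steps):
    """Run checklist steps in order, verifying each one before moving on.

    Each step is a (description, action, check) tuple: action() does the work
    and check() returns True if the work demonstrably happened. The run stops
    at the first failed check, so a human only has to review the summary.
    """
    results = []
    for description, action, check in steps:
        action()
        ok = bool(check())
        results.append((description, ok))
        if not ok:
            break  # don't build later steps on top of a failed one
    return results


def wget_step(url, dest):
    """Hypothetical step: fetch url with wget, then confirm the file landed."""
    def action():
        # check=True raises CalledProcessError if wget exits with an error
        subprocess.run(["wget", "-q", "-O", dest, url], check=True)

    def check():
        # the scripted equivalent of running 'ls' on the downloaded file
        return os.path.isfile(dest) and os.path.getsize(dest) > 0

    return ("download %s" % url, action, check)
```

You would build a list of steps such as wget_step("http://example.com/release.tar.gz", "/tmp/release.tar.gz") (a hypothetical URL), pass it to run_checklist, and print the (description, ok) pairs it returns for the human to make the final call.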

Wednesday, December 05, 2007

GHOP students ROCK!

This bodes very well for Open Source in general, and for the Python community in particular. I hope that the students will continue to contribute to existing Python projects and start their own.

Here are some examples from tasks that I've been involved with:

- Michael Kremer profiled effbot's widefinder implementations and discussed the results superbly

- Eren Turkay wrote unit tests for the pydigg module, obtaining almost 90% code coverage; he's currently tackling a 2nd task, writing unit tests for SimpleXMLRPC

- iammisc (not sure what the real name is) is writing an API for interacting with MySpace

If you want to witness all this for yourself, and maybe get some help for your project from some really smart students, send an email with your proposal for tasks to the GHOP discussion list.

Thursday, November 29, 2007

Interview with Jerry Weinberg at Citerus

"Q: If you're the J.K. Rowling of software development, who's Harry P then?

A: Well, first of all, I'm not a billionaire, so it's probably not correct to say I'm the J.K. Rowling of software development. But if I were, I suspect my Harry Potter would be a test manager, expected to do magic but discounted by software developers because "he's only a tester." As for Voldemort, I think he's any project manager who can't say "no" or hear what Harry is telling him."

Testers are finally redeemed :-)

Vonnegut's last interview

Monday, November 12, 2007

PyCon'08 Testing Tutorial proposal

Friday, October 19, 2007

Pybots updates

I also had to disable the tests for bzr dev on my RH 9 buildslave, because for some reason they were leaving a lot of orphaned/zombie processes around.

With help from Jean-Paul Calderone from the Twisted team, we managed to get the Twisted buildslave (running RH 9) past some annoying multicast-related failures. Jean-Paul had me add an explicit iptables rule to allow multicast traffic. The rule is:

iptables -A INPUT -j ACCEPT -d 225.0.0.0/24

This seems to have done the trick. There are some Twisted unit tests that still fail -- some of them apparently because raising string exceptions is now illegal in the Python trunk (2.6). Jean-Paul will investigate and I'll report on the findings -- after all, this type of issue is exactly why we set up the Pybots farm in the first place.
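For anyone who hasn't run into this yet: a string exception is a bare string passed to raise. The sketch below (class and function names are hypothetical) shows the old idiom failing with a TypeError on Python 2.6 and later, instead of raising the exception a test expects, along with the usual fix of raising an instance of an Exception subclass.

```python
class TransferError(Exception):
    """Exception class replacing a legacy string exception (hypothetical name)."""


def legacy_raise():
    # Pre-2.6 style: raising a bare string. Python 2.6 and later reject this
    # with a TypeError instead of raising the string.
    raise "transfer failed"


def modern_raise():
    # The fix: raise an instance of a class derived from Exception.
    raise TransferError("transfer failed")


def outcome(func):
    """Return the type of exception func raises, as a test runner would see it."""
    try:
        func()
    except Exception as exc:
        return type(exc)
    return None
```

A test that expected the string exception now sees outcome(legacy_raise) come back as TypeError, which is the kind of failure the buildslaves surface.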

As usual, I end with a plea to people interested in running Pybots buildslaves to either send a message to the mailing list or contact me directly at grig at gheorghiu dot net.

Compiling mod_python on RHEL 64 bit

I first tried this:

# tar xvfz mod_python-3.3.1.tar.gz

# cd mod_python-3.3.1

# ./configure --with-apxs=/usr/sbin/apxs --with-python=/usr/local/bin/python2.5

# make

...at which point I got this ugly error:

/usr/lib64/apr-1/build/libtool --silent --mode=link gcc -o mod_python.la \
-rpath /usr/lib64/httpd/modules -module -avoid-version finfoobject.lo \
hlistobject.lo hlist.lo filterobject.lo connobject.lo serverobject.lo util.lo \
tableobject.lo requestobject.lo _apachemodule.lo mod_python.lo \
-L/usr/local/lib/python2.5/config -Xlinker -export-dynamic -lm \
-lpython2.5 -lpthread -ldl -lutil -lm
/usr/bin/ld: /usr/local/lib/python2.5/config/libpython2.5.a(abstract.o): relocation R_X86_64_32 against `a local symbol' can not be used when making a shared object; recompile with -fPIC
/usr/local/lib/python2.5/config/libpython2.5.a: could not read symbols: Bad value
collect2: ld returned 1 exit status
apxs:Error: Command failed with rc=65536

I googled around for a bit, and I found this answer courtesy of Martin von Loewis. To quote:

It complains that some object file of Python wasn't compiled with -fPIC (position-independent code). This is a problem only if

a) you are linking a static library into a shared one (mod_python, in this case), and

b) the object files in the static library weren't compiled with -fPIC, and

c) the system doesn't support position-dependent code in a shared library

As you may have guessed by now, it is really c) which I blame. On all other modern systems, linking non-PIC objects into a shared library is supported (albeit sometimes with a performance loss on startup).

So your options are

a) don't build a static libpython, instead, build Python with --enable-shared. This will give you libpython24.so which can then be linked "into" mod_python

b) manually add -fPIC to the list of compiler options when building Python, by editing the Makefile after configure has run

c) find a way to overcome the platform limitation. E.g. on Solaris, the linker supports an impure-text option which instructs it to accept relocations in a shared library.

You might wish that the Python build process supported option b), i.e. automatically adds -fPIC on Linux/AMD64. IMO, this would be a bad choice, since -fPIC itself usually causes a performance loss, and isn't needed when we link libpython24.a into the interpreter (which is an executable, not a shared library).

Therefore, I'll close this as "won't fix", and recommend to go with solution a).
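Whether an existing interpreter was already built with --enable-shared (option a above) can be checked by asking it how it was configured. The sketch below uses the sysconfig module (Python 2.7 and 3.2+; on Python 2.5, distutils.sysconfig.get_config_var does the same lookup). It assumes a Unix build, where configure records Py_ENABLE_SHARED and LDLIBRARY; the function names are my own.

```python
import sysconfig


def built_with_enable_shared():
    """True if this interpreter was configured with --enable-shared.

    Py_ENABLE_SHARED comes from the Makefile that configure generated: 1 for a
    shared libpython, 0 for a static one. Where there is no configure-generated
    Makefile (e.g. Windows) the variable is absent and this returns False.
    """
    return bool(sysconfig.get_config_var("Py_ENABLE_SHARED"))


def libpython_name():
    """Library the interpreter links against, e.g. libpython2.5.so or libpython2.5.a."""
    return sysconfig.get_config_var("LDLIBRARY")
```

If built_with_enable_shared() returns False for the python you intend to pass to mod_python's --with-python, that interpreter will hit the same static-libpython link problem.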

So I proceeded to reconfigure Python 2.5 via './configure --enable-shared', then the usual 'make; make install'. However, I hit another snag right away when trying to run the new python2.5 binary:

# /usr/local/bin/python

python: error while loading shared libraries: libpython2.5.so.1.0: cannot open shared object file: No such file or directory

I remembered from similar issues I'd run into before that I had to add the directory containing libpython2.5.so.1.0 (which is /usr/local/lib) to an ldconfig configuration file.

I created /etc/ld.so.conf.d/python2.5.conf with the contents '/usr/local/lib' and I ran

# ldconfig

At this point, I was able to run the python2.5 binary successfully.
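Before and after the ldconfig step, a script can confirm that the dynamic linker resolves every library a binary needs by parsing ldd output. This is a minimal sketch; it assumes ldd's usual "name => not found" wording for unresolved libraries, and the function names are hypothetical.

```python
import subprocess


def missing_libraries(ldd_output):
    """Return the names of shared libraries that ldd reports as 'not found'."""
    missing = []
    for line in ldd_output.splitlines():
        if "=> not found" in line:
            missing.append(line.split("=>")[0].strip())
    return missing


def check_binary(path):
    """Run ldd on a binary and return whatever the dynamic linker cannot resolve."""
    result = subprocess.run(["ldd", path], capture_output=True, text=True)
    return missing_libraries(result.stdout)
```

Run against /usr/local/bin/python2.5 from this post, check_binary should report libpython2.5.so.1.0 before the ldconfig change and an empty list afterwards.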

I then re-configured and compiled mod_python with

# ./configure --with-apxs=/usr/sbin/apxs --with-python=/usr/local/bin/python2.5

# make

Finally, I copied mod_python.so from mod_python-3.3.1/src/.libs to /etc/httpd/modules and restarted Apache.

Not a lot of fun, that's all I can say.

Update 10/23/07

To actually use mod_python, I had to also copy the directory mod_python-3.3.1/lib/python/mod_python to /usr/local/lib/python2.5/site-packages. Otherwise I would get lines like these in the apache error_log when trying to hit a mod_python-enabled location:

[Mon Oct 22 19:41:20 2007] [error] make_obcallback: \
could not import mod_python.apache.\n \
ImportError: No module named mod_python.apache
[Mon Oct 22 19:41:20 2007] [error] make_obcallback: \
Python path being used \
"['/usr/local/lib/python2.5/site-packages/setuptools-0.6c6-py2.5.egg', \
'/usr/local/lib/python25.zip', '/usr/local/lib/python2.5', \
'/usr/local/lib/python2.5/plat-linux2', \
'/usr/local/lib/python2.5/lib-tk', \
'/usr/local/lib/python2.5/lib-dynload', '/usr/local/lib/python2.5/site-packages']".
[Mon Oct 22 19:41:20 2007] [error] get_interpreter: no interpreter callback found.

Update 01/29/08

I owe Graham Dumpleton (a mod_python developer and the creator of mod_wsgi) an update to this post. As he pointed out in the comments, instead of manually copying directories around, I could have simply run:

make install

and the installation would have properly updated the site-packages directory under the correct version of python (2.5 in my case) -- this is because I specified that version in the --with-python option of ./configure.

Another option, if you only want to copy the Python files into the site-packages directory and don't want to copy mod_python.so into the Apache modules directory, is:

make install_py_lib

Update 06/18/10

From Will Kessler:

"You might also want to add a little note though. The error message may actually be telling you that Python itself was not built with --enable-shared. To get mod_python-3.3.1 working you need to build Python with -fPIC (use enable-shared) as well."

Thursday, October 04, 2007

What's more important: TDD or acceptance testing?

As I said before, holistic testing is the way to go.

Wednesday, September 26, 2007

Roy Osherove book on "The art of unit testing"

Wednesday, September 19, 2007

Beware of timings in your tests

The problem was that some file transfers were failing in a mysterious way. We obviously looked at the network connectivity between our data center and the user who initially reported the problem, then we looked at the size of the files he was trying to transfer (he thought files over 10 MB were the culprit). We also looked at the number of files transferred, both multiple files in one operation and single files in consecutive operations. We tried transferring files using both a normal FTP client and the Java applet. Everything seemed to point in the direction of 'works for me' -- a stance well-known to testers around the world. All of a sudden, around an hour after I started using the Java applet to transfer files, I got the error 'unable to upload one or more files', followed by the message 'software caused connection abort: software write error'. I thought, OK, this may be due to web sessions timing out after an hour. I did some more testing, and the second time I got the error after half an hour. I also noticed that I had let some time pass between transfers. This gave me the idea of investigating the timeout settings on the FTP server side (which was running vsftpd). And lo and behold, here's what I found in the man page for vsftpd.conf:

idle_session_timeout

The timeout, in seconds, which is the maximum time a remote client may spend between FTP commands. If the timeout triggers, the remote client is kicked off.

Default: 300

My next step was of course to wait 5 minutes between file transfers, and sure enough, I got the 'unable to upload one or more files' error.

Lesson learned: pay close attention to the timing of your tests. Also look for timeout settings both on the client and on the server side, and write corner test cases accordingly.
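Here is a minimal Python sketch of such a corner test using ftplib: it idles on an open connection for a delay just under, then just over, the server's idle timeout (300 seconds is the vsftpd default quoted above), and then attempts an upload. The function names and the 10-second margin are assumptions for illustration; the sleep function is injectable so the logic can be exercised without actually waiting five minutes.

```python
import ftplib
import io
import time


def idle_delays(timeout_s, margin_s=10):
    """Corner-case delays around a server idle timeout: just under it and just over it."""
    return [max(0, timeout_s - margin_s), timeout_s + margin_s]


def upload_after_idle(ftp, delay_s, name, payload, sleep=time.sleep):
    """Stay idle on an open FTP connection for delay_s seconds, then try an upload.

    Returns True if the upload went through and False if the server had
    already kicked us off (a 421 reply, a dropped connection, or EOF).
    """
    sleep(delay_s)
    try:
        ftp.storbinary("STOR " + name, io.BytesIO(payload))
        return True
    except ftplib.all_errors:
        return False
```

In practice you would open a fresh connection for each delay (ftplib.FTP with a hypothetical host such as ftp.example.com, followed by login()), since a connection that has timed out is gone for good, and expect True for the shorter delay and False for the longer one.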

In the end, it was by luck that I discovered the cause of the problems we had, but as Louis Pasteur said, "Chance favors the prepared mind". I'll surely be better prepared next time, timing-wise.

Thursday, September 13, 2007

Barack Obama is now a connection

The bottom line is that YOU TOO can have Barack as your connection, if only to brag to your friends about it.

Wednesday, September 12, 2007

Thursday, September 06, 2007

Security testing book review on Dr. Dobbs site

Wednesday, September 05, 2007

Weinberg on Agile

Tuesday, September 04, 2007

Jakob Nielsen on fancy formatting and fancy words

Friday, August 24, 2007

Put your Noonhat on

This has potential not only for single people trying to find a date, but also for anybody who's unafraid of stepping out of their comfort zone and striking up interesting conversations over lunch. Brian and his site have already been featured on a variety of blogs and even in mainstream media around Seattle. Check out the Noonhat blog for more details.

Well done, Brian, and may your site prosper (of course, the ultimate in prosperity is being bought by Google :-)

Tuesday, August 21, 2007

Fuzzing in Python

Update: got through the first 5-6 chapters of the book. Highly entertaining and educational so far.

Wednesday, August 08, 2007

Werner Vogels talk at QCon

Vogels pretty much equated partitioning with failure. Failure is inevitable, so partition tolerance is the one property out of those 3 that you can't give up. You're left with a choice between consistency and availability, or between ACID and BASE. According to Vogels, there's also a middle-of-the-road approach, where you make that choice based on the needs of a particular service. He gave the example of the checkout process on amazon.com. When customers want to add items to their shopping cart, you ALWAYS want to honor that request (obviously because that's $$$ in the bank for you). So you choose high availability, and you hide errors from the customers in the hope that the system will sort out the errors at a later stage. When the customer hits the 'Submit order' button, you want high consistency for the next phase, because several sub-systems access that data at the same time (credit card processing, shipping and handling, reporting, etc.).

I also liked the approach Amazon takes when splitting people into teams. They have the 2-pizza rule: if it takes more than 2 pizzas to feed a team, it means the team is too large and needs to be split up. This equates to about 8 people per team. They actually make architectural decisions based on team size. If a feature is deemed too large to be comprehended by a team of 8 people, they split the feature into smaller pieces that can be digested more easily. Very agile approach :-)

Anyway, good presentation, highly recommended.

Tuesday, August 07, 2007

Automating tasks with pexpect

A couple of caveats I discovered so far:

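- make sure you specify the text you expect back correctly; even an extra space can be costly and make your script hang (until pexpect's timeout, 30 seconds by default, raises an exception); you can add '.*' to the beginning of the text you're expecting to tolerate unexpected characters before it, but a trailing '.*' won't help, because pexpect matches as little as possible at the end of a pattern, so a trailing '.*' always matches nothing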

- if you want to print the output from the other side of the connection, use child.before (where child is the process spawned by pexpect)

#!/usr/bin/env python

import pexpect

def show_virtual(child, virtual):
    child.sendline('show server virtual %s' % virtual)
    child.expect('SSH@MyLoadBalancer>')
    # child.before holds everything the load balancer printed before the prompt
    print(child.before)

def show_real(child, real):
    child.sendline('show server real %s' % real)
    child.expect('SSH@MyLoadBalancer>')
    print(child.before)

virtuals = ['www.mysite.com']
reals = ['web01', 'web02']

# log in over ssh and wait for the load balancer's CLI prompt
child = pexpect.spawn('ssh myadmin@myloadbalancer')
child.expect('.* password:')
child.sendline('mypassword')
child.expect('SSH@MyLoadBalancer>')

for virtual in virtuals:
    show_virtual(child, virtual)

for real in reals:
    show_real(child, real)

child.sendline('exit')

Think twice before working from a Starbucks

Pretty scary, and it makes me think twice before firing up my laptop in a public wireless hotspot. The people who wrote Hamster, from Errata Security, already released another tool called Ferret, which intercepts juicy bits of information -- they call it 'information seepage'. You can see a presentation on Ferret here. They're supposed to release Hamster into the wild any day now.

Update: If the above wasn't enough to scare you, here's another set of wireless hacking tools called Karma (see the presentation appropriately called "All your layers are belong to us".)

Thursday, August 02, 2007

That's what I call system testing

It's also interesting that the article mentions insiders as a security threat -- namely, that insiders will try to print their own accreditation badges, or do it for their friends, etc. As always, the human factor is the hardest to deal with. They say they resort to extensive background checks for the 2,500 or so IT volunteers, but I somehow doubt that will be enough.

Tuesday, July 31, 2007

For your summer reading list: book on Continuous Integration

Monday, July 30, 2007

Notes from the SoCal Piggies meeting

Saturday, July 28, 2007

Dilbert, the PHB, and automated tests

Friday, July 27, 2007

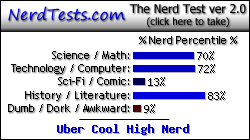

Your purpose is the Python group

How did it guess??? I used to not be a big believer in AI, but now I'm sold.

Pybots updates

Speaking of test-infected projects, it was nice to see unit testing topping the list of topics in the Django tutorial given at OSCON by Jeremy Dunck, Jacob Kaplan-Moss and Simon Willison. In fact, Titus is quoted too on this slide, which seems destined to become a classic (and I'm proud he uttered those words during the Testing Tools Panel that I moderated at PyCon07). Way to go, Titus, but I'd really like to see some T-shirts sporting that quote :-)

Saturday, July 07, 2007

Another Django success story

Thursday, June 21, 2007

Interested in a book on automated Web app testing?

Wednesday, June 06, 2007

Brian Marick on "Four implementation styles for workflow tests"

Friday, May 25, 2007

Consulting opportunities

Wednesday, May 16, 2007

Tuesday, May 15, 2007

Eliminating dependencies with regenerative build tools

I bet this idea could easily be applied to Python projects, where you would comment out import statements and see if your unit test suite still passes. Of course, you can combine it with snakefood, a very interesting dependency graphing tool just released by Martin Blais. And you can also combine it with mutation testing tools such as Pester, which inject faults into your code to see whether your tests catch them; Pester belongs to the Jester family of tools also mentioned by Michael Feathers in his blog post.
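A minimal sketch of that idea in Python: comment out one import at a time and ask a test hook whether the suite still passes. Only imports that start at column 0 are tried, and file_based_runner (a hypothetical helper) writes the candidate source over the module, runs whatever test command you give it, and always restores the original file.

```python
import subprocess


def removable_imports(source, tests_pass):
    """Comment out each top-level import in turn; return the ones the tests don't miss.

    tests_pass(candidate_source) must return True when the unit test suite
    still passes against the modified source.
    """
    lines = source.splitlines()
    removable = []
    for i, line in enumerate(lines):
        if line.startswith(("import ", "from ")):
            trial = lines[:i] + ["# " + line] + lines[i + 1:]
            if tests_pass("\n".join(trial) + "\n"):
                removable.append(line)
    return removable


def file_based_runner(module_path, test_command):
    """Hypothetical hook: write the candidate over module_path, run the suite, restore the file."""
    def tests_pass(candidate):
        with open(module_path) as f:
            original = f.read()
        try:
            with open(module_path, "w") as f:
                f.write(candidate)
            return subprocess.call(test_command) == 0
        finally:
            with open(module_path, "w") as f:
                f.write(original)
    return tests_pass
```

For example, file_based_runner("mymodule.py", ["python", "-m", "unittest", "test_mymodule"]) would produce a hook to pass to removable_imports along with the module's source; both file names are made up.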

Monday, May 14, 2007

Brian Marick has a new blog

"This is another step in my multi-decade switch from testing.com to exampler.com. "Exampling", though not a verb, is a better description of what I do now. It includes testing, but has a larger scope."

And by the way, the style of technical writing that Brian describes in his latest post bugs me no end too...

Thursday, May 10, 2007

Resetting MySQL account passwords

1) Stop mysqld, for example via 'sudo /etc/init.d/mysqld stop'

2) Create text file /tmp/mysql-init with the following contents (note that the file needs to be in a location that is readable by user mysql, since it will be read by the mysqld process running as that user):

SET PASSWORD FOR 'root'@'localhost' = PASSWORD('newpassword');

3) Start mysqld_safe with the following option, which will set the password to whatever was specified in /tmp/mysql-init:

$ sudo /usr/bin/mysqld_safe --init-file=/tmp/mysql-init &

4) Test connection to mysqld:

$ sudo mysql -uroot -pnewpassword

5) If connection is OK, restart mysqld server:

$ sudo /etc/init.d/mysqld restart
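If you script step 2, quote the password properly so that a character such as a single quote doesn't break the statement. This sketch assumes MySQL's default sql_mode, where backslash escapes characters inside string literals (it does not under NO_BACKSLASH_ESCAPES); the helper names are hypothetical. Note that MySQL 8.0 removed the PASSWORD() function; on those versions ALTER USER 'root'@'localhost' IDENTIFIED BY 'newpassword'; does the same job.

```python
import os


def sql_string(value):
    """Quote value as a MySQL string literal (escape backslashes, then single quotes)."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def write_init_file(path, user, host, new_password):
    """Write the one-line --init-file script from step 2 and return its text.

    The file must be readable by the mysql user, and it holds the new password
    in clear text, so remove it once the connection test in step 4 succeeds.
    """
    statement = "SET PASSWORD FOR %s@%s = PASSWORD(%s);\n" % (
        sql_string(user), sql_string(host), sql_string(new_password))
    with open(path, "w") as f:
        f.write(statement)
    return statement
```

Calling write_init_file("/tmp/mysql-init", "root", "localhost", "newpassword") produces exactly the statement shown in step 2.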

Also for future reference, here's how to reset a normal user account password in MySQL:

Connect to mysqld as root (I assume you know the root password):

$ mysql -uroot -prootpassword

Use the SET PASSWORD command:

mysql> SET PASSWORD for 'myuser'@'localhost' = PASSWORD('newuserpassword');

Wednesday, May 09, 2007

Apache virtual hosting with Tomcat and mod_jk

Let's say you want to configure virtual hosts in Apache, with each virtual host talking to a different Tomcat instance via the mod_jk connector. Each virtual host serves up a separate application via a URL such as http://www.myapp.com. This URL needs to be directly mapped to a Tomcat application. This is a fairly important requirement, because you don't want to go to a URL such as http://www.myapp.com/somedirectory to see your application. This means that your application will need to be running in the ROOT of the Tomcat webapps directory.

You also want Apache to serve up some static content, such as images.

Running multiple instances of Tomcat has a couple of advantages: 1) you can start/stop your Tomcat applications independently of each other, and 2) if a Tomcat instance goes down in flames, it won't take the other ones down with it.

Here is a recipe that worked for me. My setup is: CentOS 4.4, Apache 2.2 and Tomcat 5.5, with mod_jk tying Apache and Tomcat together (mod_jk2 has been deprecated).

Scenario: we want www.myapp1.com to go to a Tomcat instance running on port 8080, and www.myapp2.com to go to a Tomcat instance running on port 8081. Apache will serve up www.myapp1.com/images and www.myapp2.com/images.

1) Install Apache and mod_jk. CentOS has the amazingly useful yum utility (similar to apt-get for you Debian/Ubuntu fans), which makes installing packages a snap:

# yum install httpd

# yum install mod_jk-ap20

2) Get the tar.gz for Tomcat 5.5 -- you can download it from the Apache Tomcat download site. The latest 5.5 version as of now is apache-tomcat-5.5.23.tar.gz.

3) Unpack apache-tomcat-5.5.23.tar.gz under /usr/local. Rename apache-tomcat-5.5.23 to tomcat8080. Unpack the tar.gz one more time, rename it to tomcat8081.

4) Change the ports Tomcat is listening on for the instance that will run on port 8081.

# cd /usr/local/tomcat8081/conf

- edit server.xml and change the following ports:

8005 (shutdown port) -> 8006

8080 (non-SSL HTTP/1.1 connector) -> 8081

8009 (AJP 1.3 connector) -> 8010

There are other ports in server.xml, but I found that just changing the 3 ports above does the trick.
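If you end up creating more than two instances, the same edit can be scripted. The sketch below uses Python's xml.etree.ElementTree to remap the shutdown port on the Server element and the port attribute of each Connector, leaving other attributes such as redirectPort alone. It is an illustration rather than a tested deployment tool: ElementTree discards XML comments, so the rewritten server.xml loses the comments that ship with Tomcat.

```python
import xml.etree.ElementTree as ET

# Port changes from step 4: shutdown, HTTP connector, AJP 1.3 connector.
PORT_MAP = {"8005": "8006", "8080": "8081", "8009": "8010"}


def shift_ports(server_xml, port_map=PORT_MAP):
    """Return server.xml text with the Server and Connector ports remapped.

    Ports not listed in port_map are left untouched.
    """
    root = ET.fromstring(server_xml)
    if root.get("port") in port_map:
        root.set("port", port_map[root.get("port")])
    for connector in root.iter("Connector"):
        port = connector.get("port")
        if port in port_map:
            connector.set("port", port_map[port])
    return ET.tostring(root, encoding="unicode")
```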

I won't go into the details of getting the 2 Tomcat instances to run. You need to create a tomcat user, make sure you have a Java JDK or JRE installed, etc., etc.

The startup/shutdown scripts for Tomcat are /usr/local/tomcat808X/bin/startup.sh|shutdown.sh.

I will assume that at this point you are able to start up the 2 Tomcat instances. The first one will listen on port 8080 and will have an AJP 1.3 connector (used by mod_jk) listening on port 8009. The second one will listen on port 8081 and will have the AJP 1.3 connector listening on port 8010.

5) Deploy your applications.

Let's say you have war files called app1.war for your first application and app2.war for your second application. As I mentioned in the beginning of this post, your goal is to serve up these applications directly under URLs such as http://www.myapp1.com, as opposed to http://www.myapp1.com/app1. One solution I found for this is to rename app1.war to ROOT.war and put it in /usr/local/tomcat8080/webapps. Same thing with app2.war: rename it to ROOT.war and put it in /usr/local/tomcat8081/webapps.

You may also need to add one line to the Tomcat server.xml file, which is located in /usr/local/tomcat808X/conf. The line in question is the one starting with Context, and it goes inside the Host section of server.xml, similar to this:

<Host name="localhost" appBase="webapps"
unpackWARs="true" autoDeploy="true"
xmlValidation="false" xmlNamespaceAware="false">
<Context path="" docBase="ROOT" debug="0"/>
</Host>

I say 'you may also need' because I've seen cases where it worked without it. But better safe than sorry. The Context element specifies ROOT as the docBase of your Web application (similar, if you will, to the Apache DocumentRoot directory).

At this point, if you restart the 2 Tomcat instances, you should be able to go to http://www.myapp1.com:8080 and http://www.myapp2.com:8081 and see your 2 Web applications.

6) Create Apache virtual hosts for www.myapp1.com and www.myapp2.com and tie them to the 2 Tomcat instances via mod_jk.

Here is the general mod_jk section in httpd.conf -- note that it needs to be OUTSIDE of the virtual host sections:

#

# Mod_jk settings

#

# Load mod_jk module

LoadModule jk_module modules/mod_jk.so

# Where to find workers.properties

JkWorkersFile conf/workers.properties

# Where to put jk logs

JkLogFile logs/mod_jk.log

# Set the jk log level [debug/error/info]

JkLogLevel emerg

# Select the log format

JkLogStampFormat "[%a %b %d %H:%M:%S %Y] "

# JkOptions indicate to send SSL KEY SIZE,

JkOptions +ForwardKeySize +ForwardURICompat -ForwardDirectories

# JkRequestLogFormat set the request format

JkRequestLogFormat "%w %V %T"

Note that the section above has an entry called JkWorkersFile, referring to a file called workers.properties, which I put in /etc/httpd/conf. This file contains information about so-called workers, which correspond to the Tomcat instances we're running on that server. My file declares 2 workers that I named app1 and app2. The first worker corresponds to the AJP 1.3 connector running on port 8009 (which is part of the Tomcat instance running on port 8080), and the second worker corresponds to the AJP 1.3 connector running on port 8010 (which is part of the Tomcat instance running on port 8081). Here are the contents of my workers.properties file:

# This file provides minimal jk configuration properties needed to

# connect to Tomcat.

#

# The workers that jk should create and work with

#

workers.tomcat_home=/usr/local/tomcat8080

workers.java_home=/usr/lib/jvm/java

ps=/

worker.list=app1, app2

worker.app1.port=8009

worker.app1.host=localhost

worker.app1.type=ajp13

worker.app1.lbfactor=1

worker.app2.port=8010

worker.app2.host=localhost

worker.app2.type=ajp13

worker.app2.lbfactor=1
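The per-worker entries follow a fixed pattern, so with more Tomcat instances it may be less error-prone to generate them. This sketch (the function name is hypothetical) emits only the worker.list and per-worker lines shown above, not the workers.tomcat_home, workers.java_home and ps lines.

```python
def workers_properties(workers, host="localhost"):
    """Build mod_jk worker definitions in the format shown above.

    workers is a list of (name, ajp_port) pairs, one per Tomcat instance.
    """
    lines = ["worker.list=" + ",".join(name for name, _ in workers)]
    for name, port in workers:
        lines.append("worker.%s.port=%d" % (name, port))
        lines.append("worker.%s.host=%s" % (name, host))
        lines.append("worker.%s.type=ajp13" % name)
        lines.append("worker.%s.lbfactor=1" % name)
    return "\n".join(lines) + "\n"
```

workers_properties([("app1", 8009), ("app2", 8010)]) reproduces the worker section of the file above.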

The way Apache ties into Tomcat is that each of the VirtualHost sections configured for www.myapp1.com and www.myapp2.com declares a specific worker. Here is the VirtualHost section I have in httpd.conf for www.myapp1.com:

<VirtualHost *:80>

ServerName www.myapp1.com

DocumentRoot "/usr/local/tomcat8080/webapps/ROOT"

<Directory "/usr/local/tomcat8080/webapps/ROOT">

# Options Indexes FollowSymLinks MultiViews

Options None

AllowOverride None

Order allow,deny

allow from all

</Directory>

ErrorLog logs/app1-error.log

CustomLog logs/app1-access.log combined

# Send ROOT app. to worker named app1

JkMount /* app1

JkUnMount /images/* app1

RewriteEngine On

RewriteRule ^/(images/.+);jsessionid=\w+$ /$1

</VirtualHost>

The 2 important lines as far as the Apache/mod_jk/Tomcat configuration is concerned are:

JkMount /* app1

JkUnMount /images/* app1

The line "JkMount /* app1" tells Apache to send everything to the worker app1, which then ties into the Tomcat instance on port 8080.

The line "JkUnMount /images/* app1" tells Apache to handle everything under /images itself -- which was one of our goals.

At this point, you need to restart Apache, for example via 'sudo service httpd restart'. If everything went well, you should be able to go to http://www.myapp1.com and http://www.myapp2.com and see your 2 Web applications running merrily.

You may have noticed a RewriteRule in each of the 2 VirtualHost sections in httpd.conf. What happens with many Java-based Web applications is that when a user first visits a page, the application does not yet know whether the user has cookies enabled, so the application will use a session ID mechanism fondly known as jsessionid. If the user does have cookies enabled, the application will not use jsessionid the second time a page is loaded. If cookies are not enabled, the application (Tomcat in our example) will continue generating URLs such as

http://www.myapp1.com/images/myimage.gif;jsessionid=0E45D13A0815A172BD1DC1D985793D02

In our example, we told Apache to process all URLs that start with 'images'. But those URLs have already been polluted by Tomcat with jsessionid the very first time they were hit. As a result, Apache was trying to process them, and was failing miserably, so images didn't get displayed the first time a user hit a page. If the user refreshed the page, images would get displayed properly (if the user had cookies enabled).

The solution I found for this issue was to use a RewriteRule that would get rid of the jsessionid in every URL that starts with 'images'. This seemed to do the trick.
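To see what the rule does, the same regular expression can be applied with Python's re module. Apache uses PCRE, but this pattern uses nothing PCRE-specific, so the behavior should match; the rewrite function is only an illustration of mod_rewrite's substitution, not part of any Apache API.

```python
import re

# Same pattern as the RewriteRule: capture 'images/...' and drop ';jsessionid=...'.
JSESSIONID_RULE = re.compile(r"^/(images/.+);jsessionid=\w+$")


def rewrite(path):
    """Apply the rule to a URL path the way mod_rewrite would, or return it unchanged."""
    return JSESSIONID_RULE.sub(r"/\1", path)
```

The polluted URL from above, /images/myimage.gif;jsessionid=0E45D13A0815A172BD1DC1D985793D02, comes out as /images/myimage.gif, while paths outside /images keep their jsessionid for Tomcat.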

That's about it. I hope this helps somebody. It's the result of some very intense googling :-)

If you have comments or questions, please leave them here and I'll try to answer them.

Monday, May 07, 2007

JRuby buzz

One thing I found very interesting in the InfoQ article was that ThoughtWorks chose to run Mingle, a Ruby on Rails application, on JRuby (the JVM-based implementation of Ruby) rather than on the standard C implementation of Ruby. They cite ease of deployment as a factor in favor of JRuby:

"In particular, the deployment story for Ruby on Rails applications is still significantly more complex than it should be. This is fine for a hosted application where the deployment platform is in full control of a single company, but Mingle isn't going to be just hosted. Not only is it going to need to scale ‘up’ to the sizes of Twitter (okay, that's wishful thinking and maybe it won't need to scale that much) but it's also going to need to scale ‘down’ to maybe a simple Windows XP machine with just a gig of RAM. On top of that, it's going to be installed by someone who doesn't understand anything about Ruby on Rails deployment and, well, possibly not much about deployment either."

They continue by saying that their large commercial customers wanted to be able to deploy Mingle by dropping a Java .war file under any of the popular Java application servers.

So, for all the talk about Ruby on Rails and the similarly hot Python frameworks, Java and J2EE are far from dead.

Here's wishing that Jython will start generating the same amount of buzz.

Tuesday, May 01, 2007

Michael Dell uses Ubuntu on his home laptop

Saturday, April 28, 2007

What programming language are *you*?

Which Programming Language are You?

Thursday, April 26, 2007

PyCon07 Testing Tools Tutorial slides up

Mounting local file systems using the 'bind' mount type

Sometimes paths are hardcoded in applications -- let's say you have the path to the Apache DocumentRoot directory hardcoded inside a web application to /home/apache/www.mysite.com. You can't change the code of the web app, but you want to migrate it. You don't want to use the same path on the new server, for reasons of standardization across servers. Let's say you want to set DocumentRoot to /var/www/www.mysite.com.

But /home is NFS-mounted, so that all users can have their home directory kept in one place. One not-so-optimal solution would be to create an apache directory under /home on the NFS server. At that point, you can create a symlink to /var/www/www.mysite.com inside /home/apache. This is suboptimal because the production servers will come to depend on the NFS-mounted directory. You would like to keep things related to your web application local to each server running that application.

A better solution (suggested by my colleague Chris) is to mount a local directory, let's call it /opt/apache_home, as /home/apache. Since the servers are already using automount, this is a question of simply adding this line as the first line in /etc/auto.home:

apache -fstype=bind :/opt/apache_home

/etc/auto.home was already referenced in /etc/auto.master via this line:

/home /etc/auto.home

Note that we're using the neat trick of mounting a local file system via the 'bind' mount type. This can be very handy in situations where symbolic links don't help, because you want to reference a real directory, not a file pointing to a directory. See also this blog post for other details and scenarios where this trick is helpful.

Now all applications that reference /home/apache will actually use /opt/apache_home.

For the specific case of the DocumentRoot scenario above, all we needed to do at this point was to create a symlink inside /opt/apache_home, pointing to the real DocumentRoot of /var/www/www.mysite.com.
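Since the point of the bind mount is that /home/apache is a real directory rather than a symlink, a deployment check can verify exactly that. A minimal sketch with a hypothetical function name: it requires that the path exists, is not a symlink, and refers to the same directory (same device and inode, via os.path.samefile) as the target. On the servers from this post you would call it as is_real_alias("/home/apache", "/opt/apache_home").

```python
import os


def is_real_alias(path, target):
    """True if path is a real directory (not a symlink) that is the same directory as target.

    After the autofs bind mount, /home/apache and /opt/apache_home refer to the
    same directory even though neither of them is a symlink.
    """
    return (os.path.isdir(path)
            and not os.path.islink(path)
            and os.path.samefile(path, target))
```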

Thursday, March 29, 2007

Dell to offer pre-installed Linux on desktops

Wednesday, March 28, 2007

OLPC and the Romanian politicians

Having seen Ivan Krstic's keynote on OLPC at PyCon this year, I realize that the One Laptop Per Child program is mainly about re-introducing kids to their intuitive ways of learning, through play, peer activities and free exploration, as opposed to the centralized, one-to-many teaching method that is used in schools everywhere. The laptop becomes in this case just a tool for facilitating the new ways of learning -- or I should say the old ways, since this is what kids do naturally. But this is one of those disruptive ideas that are hard for serious grown-up people, especially politicians, to grasp...

Thursday, March 22, 2007

File sharing with Apache and WebDAV

1) Let's say we want to share files in a directory named /usr/share/myfiles. I created a sub-directory called dav in that directory, and then I ran:

# chmod 775 dav
# chgrp apache dav

2) Make sure httpd.conf loads the mod_dav modules:

LoadModule dav_module modules/mod_dav.so
LoadModule dav_fs_module modules/mod_dav_fs.so

3) Create an Apache password file (if you want to use basic authentication) and a user -- let's call it webdav:

# htpasswd -c /etc/httpd/conf/.htpasswd webdav

4) Create a virtual host entry in httpd.conf, similar to this one:

<VirtualHost *>
    ServerName share.mydomain.com
    DocumentRoot "/usr/share/myfiles"
    <Directory "/usr/share/myfiles">
        Options Indexes FollowSymLinks MultiViews
        AllowOverride AuthConfig
        Order allow,deny
        allow from all
    </Directory>
    ErrorLog share-error.log
    CustomLog share-access.log combined
    DavLockDB /tmp/DavLock
    <Location /dav>
        Dav On
        AuthType Basic
        AuthName DAV
        AuthUserFile /etc/httpd/conf/.htpasswd
        Require valid-user
    </Location>
</VirtualHost>

5) Restart httpd, then verify that when you go to http://share.mydomain.com/dav you are prompted for a user name and password, and that once you get past the security dialog you see something like 'Index of /dav'.
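The check in step 5 can also be scripted. Below is a minimal Python sketch, assuming the host name, path and webdav user from the steps above (the password and the helper names are hypothetical). It sends a WebDAV PROPFIND request: without credentials the server should answer 401, and with valid credentials a DAV-enabled location should answer 207 Multi-Status.

```python
import base64
import http.client


def propfind_headers(user=None, password=None):
    """Headers for a PROPFIND request, optionally with HTTP basic auth."""
    headers = {"Depth": "1"}  # list the collection and its direct children
    if user is not None:
        token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
        headers["Authorization"] = "Basic " + token
    return headers


def propfind_status(host, path, user=None, password=None):
    """Send PROPFIND to http://host/path and return the HTTP status code."""
    conn = http.client.HTTPConnection(host, 80, timeout=10)
    try:
        conn.request("PROPFIND", path, headers=propfind_headers(user, password))
        return conn.getresponse().status
    finally:
        conn.close()


def check_dav(host, path, user, password):
    # Anonymous access must be refused; authenticated access must work.
    return (propfind_status(host, path) == 401
            and propfind_status(host, path, user, password) == 207)
```

For example, check_dav("share.mydomain.com", "/dav/", "webdav", "secret") should return True once the virtual host above is live.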

Now it's time to configure your Windows client to see the shared WebDAV resource. On the Windows client, either:

- go to "My network connections" and add a new connection, or

- go to Windows Explorer->Tools->Map Network Drive, then click on "Sign up for online storage or connect to a network server"

- Click Next, then select "Choose another network location", then click Next.

- For "Internet or network address", set http://share.mydomain.com/dav. At this point you'll be prompted for a user name/password; specify the ones you defined above.

- After mapping the resource, you should be able to read/write to it.

Sometimes the Windows dialog asking for a user name and password will say "connecting to share.mydomain.com" and will keep asking you for the user name/password. The dialog is supposed to show the text you set in AuthName (DAV in my case). If it doesn't, click Cancel, then try again. You can also try to force HTTP basic authentication (as opposed to Windows authentication, which is what Windows tries to do) by specifying http://share.mydomain.com:80/dav as the URL. See also this entry on the WebDAV Wikipedia page.

Resources

- Apache2 mod_dav documentation

- Article by Martin Brown on "Enabling WebDAV in Apache"

- Setting up Apache2 and WebDAV on Debian

Wednesday, March 21, 2007

Ubuntu "command not found" magic

First you need to apt-get the command-not-found package:

$ sudo apt-get install command-not-found
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following extra packages will be installed:
command-not-found-data
The following NEW packages will be installed:
command-not-found command-not-found-data
0 upgraded, 2 newly installed, 0 to remove and 15 not upgraded.
Need to get 471kB/475kB of archives.
After unpacking 6263kB of additional disk space will be used.
Do you want to continue [Y/n]? y
Get:1 http://us.archive.ubuntu.com edgy/universe command-not-found-data 0.1.0 [471kB]
Fetched 300kB in 2s (109kB/s)
Selecting previously deselected package command-not-found-data.
(Reading database ... 92284 files and directories currently installed.)
Unpacking command-not-found-data (from .../command-not-found-data_0.1.0_i386.deb) ...
Selecting previously deselected package command-not-found.
Unpacking command-not-found (from .../command-not-found_0.1.0_all.deb) ...
Setting up command-not-found-data (0.1.0) ...
Setting up command-not-found (0.1.0) ...

Then you need to open a new shell window, so that the hook gets installed. In that window, try running some commands which are part of packages that you don't yet have installed. For example:

$ nmap
The program 'nmap' is currently not installed, you can install it by typing:
sudo apt-get install nmap

$ snort
The program 'snort' can be found in the following packages:
* snort-pgsql
* snort-mysql
* snort
Try: sudo apt-get install

Pretty cool, huh? And of course the bash hook is written in Python.
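The idea behind the hook is a lookup from command names to the packages that provide them. Here is a toy Python sketch of that idea; it is not the real command-not-found code, and the index below is a hypothetical hard-coded dict, whereas the real package ships its own data files (command-not-found-data).

```python
# Hypothetical index: command name -> packages that provide it.
COMMAND_INDEX = {
    "nmap": ["nmap"],
    "snort": ["snort-pgsql", "snort-mysql", "snort"],
}


def suggest(command, index=COMMAND_INDEX):
    """Build a suggestion message for a command that is not installed."""
    packages = index.get(command, [])
    if not packages:
        return f"{command}: command not found"
    if len(packages) == 1:
        return (f"The program '{command}' is currently not installed, "
                f"you can install it by typing:\nsudo apt-get install {packages[0]}")
    lines = [f"The program '{command}' can be found in the following packages:"]
    lines += [f" * {package}" for package in packages]
    lines.append("Try: sudo apt-get install <package>")
    return "\n".join(lines)


if __name__ == "__main__":
    print(suggest("snort"))
```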

Monday, March 19, 2007

Founder of Debian joins Sun

CheeseRater - voting in the CheeseShop

Sunday, March 18, 2007

Stone soup as a cure for broken windows

Wednesday, March 14, 2007

A few good agile men

"Marketing: "Did you cut the automated, edit sync [insert favorite feature here] feature?"

Development: "I did the job I was hired to do."

Marketing: "Did you cut the automated, edit sync feature?"

Development: "I delivered the release on time."

Marketing: "Did you cut the automated, edit sync feature?"

Development: "You're g%$#@*& right I did!""

Thursday, March 08, 2007

Wednesday, March 07, 2007

Tuesday, February 27, 2007

testing-in-python mailing list

- testing tools that people have successfully used in their projects

- testing techniques that help in certain situations (mocking for example)

- real life scenarios where a specific type of testing (e.g. functional) helped more than another type of testing (e.g. unit)

- etc. etc.

Update 02/28/07

What do you know, the name TIP struck a chord, so Titus created an alias for it. You can now send email to the list via tip at lists.idyll.org too.

William McVey's PyCon notes as mindmap

Also from William, other PyCon07 notes.

Monday, February 26, 2007

Testing Tools Panel at PyCon

- Benji York: zope.testbrowser

- Brian Dorsey: py.test (representing Holger Krekel)

- Chad Whitacre: testosterone (his own creation); has since switched to nose

- Ian Bicking: paste.test.fixture, minimock, FitLoader

- Jeff Younker: PyMock

- Kumar McMillan: fixture - module for loading and referencing test data

- Martin Taylor: test framework within TI

- Neal Norwitz: PyChecker

- Tim Couper: WATSUP (Windows GUI Testing)

- Titus Brown: twill, scotch, figleaf, pinocchio

Matt Harrison has a very good write-up on the discussions we had during the panel (actually I lifted the list above from his blog post, because he summarized it so well).

One thing that I think all the participants felt, and maybe the audience too, was that 45 minutes was nowhere near enough for this kind of panel. I felt the same way about the other two panels, on Python-dev and on Web frameworks. So I'd like to ask people to leave comments on this post with ideas for turning these panels into longer discussions, lasting at least an hour, or even more.

My gut feeling is that there would be a lot of interest in getting framework/library/tool creators together and having a discussion/Q&A with them in front of the audience, with audience participation of course. I'm not sure what the best format would be for this kind of thing -- maybe a round table? But if we get enough ideas, maybe we can fit something like this in next year's PyCon schedule, and allocate it a generous amount of time.

Testability

Although our tutorial was focused on tools and techniques for implementing test automation, we also mentioned that you will never be able to get rid of manual testing. Even though the Google testing team says that 'Life is too short for manual testing' (and I couldn't agree more with them), they hasten to qualify this slogan by adding that automated testing frees you up to do more meaningful exploratory testing.

My experience as a tester shows that the nastiest bugs are often discovered by manual testing. But when you do discover them manually, the best strategy is to write automated tests for them, so that you'll check your application in that particular area from that moment on, via an automated test suite which runs in your continuous integration system.
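Here is a minimal sketch of that strategy using Python's unittest. The function and the bug are hypothetical: imagine exploratory testing showed that typing a quantity with a thousands separator, such as "1,000", crashed the application (int() raises ValueError on it). Once the fix is in, a regression test pins the behavior down so the continuous integration run catches it if it ever comes back.

```python
import unittest


def parse_quantity(text):
    """Hypothetical function under test: parse a user-typed quantity.

    The fix for the manually discovered bug is to drop thousands separators.
    """
    return int(text.strip().replace(",", ""))


class QuantityRegressionTest(unittest.TestCase):
    def test_thousands_separator(self):
        # Reproduces the bug found by hand; fails again if the fix regresses.
        self.assertEqual(parse_quantity("1,000"), 1000)

    def test_plain_number(self):
        self.assertEqual(parse_quantity("42"), 42)


if __name__ == "__main__":
    unittest.main()
```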

You do have an automated test suite, right? And it does run periodically (daily or on every check-in) in a continuous integration system, right? And you have everything set up so that you're notified by email or RSS feeds when something fails, right? And you fix failures quickly so that everything turns back to green, because you know that too much red, too often, leads to broken windows and bit rot, right?

If you answered No to any of these questions, then you are not testing your application, period (but you already knew this if you took our tutorial -- it was on the last slide :-)

Friday, February 23, 2007

Photos from PyCon panels

PyCon day 1

Just got out of the first keynote, Ivan Krstic's talk on the "One Laptop Per Child" project. Pretty interesting -- here are some tidbits I remember:

- OLPC wants to change the way teaching and learning is done these days; they want to go back to the time when preschool kids interacted with each other by playing, and learned naturally peer-to-peer (as opposed to institutionalized teaching, which is one-to-many)

- contrary to popular opinion, the laptop does not have a hand crank (it would wear down too fast if it had one); however, the laptop can be powered by a pull string that reacts to the puller's strength and powers the device accordingly

- the 2 rabbit ears are used for wireless; the laptop can speak 802.11s, a new protocol that can be used for fully meshed networking; as soon as one laptop is connected to the internet, all the other ones in its mesh will be connected too

- the CPU is an AMD Geode at 366 MHz (not 400 or 500, actually 366)

- no hard drive, uses 512 MB of flash storage

- OS is a stripped-down version of Fedora

- runs Python wherever it can (including the init boot daemon); some exceptions are the X.org windowing system, the mDNS daemon, and the bus communication; pretty much all other user-level software, including the file system, is written in Python

- the laptop has a 'show source' button which obviously shows Python source code that can be edited, etc.

- no adult has ever been able to open the laptop in less than 2 minutes

- no child has ever needed more than 30 seconds to open the laptop

- two fortunate souls got an OLPC XO laptop today: Guido (as the creator of Python), and a guy who was able to recognize a very complicated formula that Ivan showed on a slide (the BBP formula for computing the n-th hexadecimal digit of pi); the guy needed approx. 1 minute to open the laptop; Guido's was already open, in a sign of respect I guess

- OLPC needs good Python developers; if you're interested, check out dev.laptop.org
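For the curious, the BBP (Bailey-Borwein-Plouffe) formula lets you compute a hexadecimal digit of pi without computing the digits before it. Below is a minimal Python sketch of the standard digit-extraction trick, using ordinary floats, so it is only reliable for modest positions and one digit per call. The function names are my own.

```python
def _bbp_series(j, n):
    """Fractional part of the sum over k >= 0 of 16**(n - k) / (8k + j)."""
    # Terms with k <= n: modular exponentiation keeps only the fractional part.
    total = 0.0
    for k in range(n + 1):
        denom = 8 * k + j
        total = (total + pow(16, n - k, denom) / denom) % 1.0
    # Terms with k > n shrink by a factor of 16 each step; add until negligible.
    k = n + 1
    while True:
        term = 16.0 ** (n - k) / (8 * k + j)
        if term < 1e-17:
            break
        total += term
        k += 1
    return total % 1.0


def pi_hex_digit(n):
    """Hex digit of pi at position n + 1 after the point (n = 0 gives the first)."""
    # pi = sum_k 16**-k * (4/(8k+1) - 2/(8k+4) - 1/(8k+5) - 1/(8k+6))
    x = (4 * _bbp_series(1, n) - 2 * _bbp_series(4, n)
         - _bbp_series(5, n) - _bbp_series(6, n)) % 1.0
    return "0123456789abcdef"[min(int(x * 16), 15)]
```

Since pi is 3.243F6A88... in hexadecimal, "".join(pi_hex_digit(i) for i in range(8)) returns "243f6a88".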

Saturday, February 17, 2007

Wikipatterns

"Any grassroots, or bottom-up, strategy is the best place to start since the success of a wiki depends on building active, sustainable participation and this only happens when people see that the software is simple enough to immediately be useful, and meets their needs without requiring them to spend lots of extra time.

A good first step is to identify a group or department who would likely benefit the most from using a wiki, and whose people are open to trying new tools. If you're looking to expand wiki use in another group, look for the thought leader in the group - someone who is very forward thinking, respected by peers, and willing to Champion a new idea and get others around them involved."

Seems like a very good complement to a book I read recently: "Fearless Change: Patterns for Introducing New Ideas" by Mary Lynn Manns and Linda Rising.

Friday, February 16, 2007

Anybody doing LDom on Solaris Sparc?

LDom seems like a cool way to partition big Sparc boxes running Solaris, if you have them. I wonder if the logical domains/virtual machines created with LDom can have Ubuntu installed on top of them (since Ubuntu supports the Sparc platform).

The Buildbot project has a Trac instance as its home

Tuesday, February 13, 2007

Ubuntu not to activate proprietary drivers by default

Friday, February 09, 2007

Cheesecake Service launched

The back-end of the Cheesecake Service talks directly to the PyPI repository using the PyPI API in order to find out about packages newly posted to PyPI. Then the service uses Cheesecake and tries to download, install, and score the package. If your package is not there, it might mean you haven't released a new version since August 10, 2006, the date from which we started to score packages. Let us know and we can score it manually so that it appears in the list.
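To illustrate the polling step, here is a minimal Python sketch of picking new releases out of a PyPI change log. It is not the Cheesecake Service code. It assumes PyPI's XML-RPC changelog(since) method returning (name, version, timestamp, action) tuples, with action "new release" for uploads; both the endpoint URL and the action string are assumptions, so check the current PyPI documentation before relying on them.

```python
import xmlrpc.client


def new_releases(changelog):
    """Return (name, version) pairs for new releases, in log order, without duplicates.

    Assumes entries shaped like (name, version, timestamp, action).
    """
    seen = set()
    releases = []
    for name, version, _timestamp, action in changelog:
        if action == "new release" and (name, version) not in seen:
            seen.add((name, version))
            releases.append((name, version))
    return releases


def fetch_changelog(since, url="https://pypi.org/pypi"):
    # Assumed XML-RPC endpoint and method; this interface has changed over the years.
    return xmlrpc.client.ServerProxy(url).changelog(since)
```

Each (name, version) pair returned would then be handed to Cheesecake for download, installation and scoring.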

Michał just posted a blog entry on Cheesecake and its Service, so please read it and let us know how we can improve on the various things he describes. We are aware that scoring packages is controversial, and we've been called names before because of it, but as Michał also says, the Cheesecake score is meant to be used as a relative number by creators of Python packages who can try to improve it, and not as an absolute ranking among packages. Think of it as an Apgar score for your software.

Kudos to Michał for his continuing hard work on new Cheesecake features and improvements.

Thursday, February 08, 2007

Internal blogs as project tracking tools

So I had a mini-revelation: an internal blog is a very good tool for tracking time you spend on various projects. Take the example of a hosting company -- it can set up an internal blog and have categories corresponding to various projects/customers; employees can jot down a summary of what they worked on each day, and put it in the appropriate category. After a while, a timeline of work done on particular projects emerges. Because posts are automatically dated, it's easy to see what you were working on 3 weeks or 3 months ago. And each blog post can contain links to more detailed howtos that are kept in a wiki which serves as a knowledge base. Blogs and wikis make entering information a snap, as opposed to more complicated project management/tracking tools. Blogs and wikis are also searchable, so finding information is easy. To me, this is a lean/agile way of keeping track of your work.
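To make the "timeline emerges" idea concrete, here is a tiny Python sketch, assuming each blog post is reduced to a (date, category, summary) tuple. The categories and summaries are made up; a real blog engine would give you the same data through its archive or category pages.

```python
from collections import defaultdict
from datetime import date


def timelines(posts):
    """Group (date, category, summary) posts into per-category timelines, oldest first."""
    by_category = defaultdict(list)
    for when, category, summary in sorted(posts):
        by_category[category].append((when, summary))
    return dict(by_category)


posts = [
    (date(2007, 2, 7), "customer-a", "migrated DNS"),
    (date(2007, 1, 15), "customer-a", "set up nightly backups"),
    (date(2007, 2, 1), "customer-b", "tuned Apache"),
]
for when, summary in timelines(posts)["customer-a"]:
    print(when, summary)
```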

Anyway, maybe this is an obvious use of blogs, but to me it's new, and of course I'm going to implement it :-)

Monday, February 05, 2007

New job

Sunday, January 28, 2007

Connecting to people on LinkedIn

"One way to help spread Python would be to have a strong presence of Python developers in various online networks. One that springs to mind is LinkedIn, a job related social networking site.

If we could encourage Python developers to start adding each other to their LinkedIn network, then we should be able to create a well-connected developer network with business and industry contacts. This should benefit everyone -- both people looking for Python developers, and also people looking for work."

So in the past week or so I started to send LinkedIn invitations to people I know, either by having worked with them, or through the various forums, mailing lists and Open Source communities I have been part of. It's amazing how many people we all know, if we think about it.

LinkedIn has several nice features that can help when you're looking for people to hire, or when you're looking for a job. Perhaps the easiest way to find people is to click on 'Advanced search' (the small link next to the main search box) and type something in the Keywords field. Try it with 'python' for example -- you'll see that a lot of people whose blogs are aggregated on Planet Python have a LinkedIn profile. Your next step, if you are a Python developer yourself, is to send invitations to people you want to connect with. If enough of us Pythonistas do this, our networks will become more and more interconnected, to everybody's advantage. And you can replace 'Pythonistas' with 'agilistas', 'rubyistas' or whatever your interest is.

It's also interesting to see how LinkedIn displays the number of the degrees of separation between yourself and people you are searching for. Amazingly enough, that number is usually 2 or 3, if not 1. This makes me think of Malcolm Gladwell's theory about Connectors in 'The Tipping Point', namely that there is a small number of people that have a LOT of connections. If you are connected to one of these Connectors, then all of a sudden you have a huge number of people in your network, and you can potentially benefit by introducing yourself to them as someone only 2 or 3 degrees of separation apart from them. This is true in my own network, where I am only 2 degrees of separation away from Guy Kawasaki for example. Why? Because a long long time ago I accepted a LinkedIn invitation from one Paul Davis, who has 500+ LinkedIn connections.
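The "degrees of separation" number is the length of the shortest path between two people in the contact graph, which a breadth-first search finds. Here is a minimal Python sketch; I have no idea how LinkedIn computes it internally, and the graph in the usage example is hypothetical, with one well-connected "connector" node.

```python
from collections import deque


def degrees_of_separation(graph, start, target):
    """Breadth-first search over an undirected contact graph.

    graph maps each person to the set of people they are connected to.
    Returns the number of hops from start to target, or None if unreachable.
    """
    if start == target:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        person, dist = queue.popleft()
        for contact in graph.get(person, ()):
            if contact == target:
                return dist + 1
            if contact not in seen:
                seen.add(contact)
                queue.append((contact, dist + 1))
    return None


graph = {
    "me": {"connector"},
    "connector": {"me", "alice", "bob"},
    "alice": {"connector"},
    "bob": {"connector", "carol"},
    "carol": {"bob"},
    "dave": set(),
}
```

With this graph, accepting a single invitation from "connector" puts "me" 2 hops from alice and bob and 3 from carol, which is the Connector effect in miniature.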

If I made you curious about LinkedIn, I'd advise you one more time to read Guy Kawasaki's blog post on how to improve your LinkedIn profile.

Speaking of jobs and hiring, if you are a hardcore Python programmer looking for work, especially in the D.C. area, the Zope Corporation is hiring.

Saturday, January 20, 2007

Pybots updates

Email notifications finally started to work too, after I figured out I was passing the wrong builder names to the MailNotifier class. And we also have RSS and Atom feeds available. If you want to be notified of failures from all builders, subscribe to:

http://www.python.org/dev/buildbot/community/all/rss or

http://www.python.org/dev/buildbot/community/all/atom

To be notified of failures from the trunk builders, subscribe to:

http://www.python.org/dev/buildbot/community/trunk/rss or

http://www.python.org/dev/buildbot/community/trunk/atom

To be notified of failures from the 2.5 branch builders, subscribe to:

http://www.python.org/dev/buildbot/community/2.5/rss or

http://www.python.org/dev/buildbot/community/2.5/atom

Matthew Flanagan also added functionality that allows you to subscribe to a feed for a particular builder. For example, to subscribe to the feed for the "x86 Ubuntu Breezy trunk" builder, use this URL:

http://www.python.org/dev/buildbot/community/all/rss?show=x86%20Ubuntu%20Breezy%20trunk
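The builder name goes into the show parameter percent-encoded, which is why spaces turn into %20. A minimal Python sketch for building such a URL for any builder, assuming the RSS feed URL pattern shown above (the helper name is hypothetical):

```python
from urllib.parse import quote

FEED_BASE = "http://www.python.org/dev/buildbot/community/all/rss"


def builder_feed_url(builder):
    """Feed URL for one builder; quote() turns spaces into %20."""
    return f"{FEED_BASE}?show={quote(builder)}"
```

For example, builder_feed_url("x86 Ubuntu Breezy trunk") yields exactly the URL above.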

If you are interested in contributing a buildslave to the Pybots project, please send a message to the Pybots mailing list, or to me (grig at gheorghiu dot net), or leave a comment here.

Tuesday, January 09, 2007

Steve Rowe on "Letting test drive the process"

According to Steve Rowe, Microsoft's development and testing process follows these recommended practices. I quote Steve:

"Also, at Microsoft, testing begins from day one. Every product I've ever been involved with at Microsoft has had daily builds from very early on. Every product has also had what we call BVTs (build verfication tests) that are run every day right after the build completes. If any of their tests fail, the product is held until they can be fixed."

Hmmm... I would expect Microsoft to have fewer problems with their products in this case. But I think a couple of problems that plague Microsoft in particular are backwards compatibility and the sheer number of hardware/OS/service pack combinations that they need to test.
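The daily-build-plus-BVT gate that Steve describes can be sketched in a few lines of Python. This is my own illustration, not Microsoft's tooling: it assumes the build and each BVT are command lines whose non-zero exit status means failure, and the function name is hypothetical.

```python
import subprocess
import sys


def run_bvts(build_cmd, bvt_cmds):
    """Run the build, then every build verification test.

    Each command is an argument list; a non-zero exit status counts as failure.
    Returns True only if the build and all BVTs pass.
    """
    if subprocess.run(build_cmd).returncode != 0:
        print("build failed", file=sys.stderr)
        return False
    failed = [cmd for cmd in bvt_cmds if subprocess.run(cmd).returncode != 0]
    for cmd in failed:
        print("BVT failed:", " ".join(cmd), file=sys.stderr)
    return not failed
```

A nightly job can then exit with status 1 when run_bvts() returns False, which is the "product is held" step: nothing ships until the BVTs are green again.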

Speaking of Microsoft and testing, I found The Braidy Tester's blog very informative.

Monday, January 08, 2007

Testing tutorial at PyCon07

Here is the tutorial outline (courtesy of Titus). If you have any suggestions, please leave a comment.

Introduction

* Why test?

* What to test?

* Using testing to boost maintainability of code.

Setting up a project

* Source control management with Subversion.

* A brief introduction to using Trac for project documentation and ticket management.

* Packaging with distutils

* Packaging with setuptools

* Registering your project with the Python Cheeseshop

* What else is out there? (distributed vs svn, roundup, ...)

Unit testing

* How to think about unit testing

* Using nose to run unit tests

* doctest-style unit tests

* What else is out there? (unittest, py.test, testosterone...)

Functional Web testing with twill

* Writing twill scripts

* Running twill scripts

* Using scotch to record actions

* Using wsgi_intercept to avoid network sockets

* What else is out there? (zope.testbrowser, mechanize, mechanoid)

Using code coverage in conjunction with unit/functional testing

* Basic code coverage with figleaf

* Monitoring code coverage in remote servers

* Combining figleaf code coverage analyses

* What else is out there? (coverage)

** BREAK **

Acceptance testing with FitNesse/PyFit

* How FitNesse works

* Writing fixtures

* Running Python fixtures

Web application testing with Selenium

* How Selenium works

* Writing and recording Selenium tests

* Scripting Selenium tests remotely with SeleniumRC

* What else is out there? (Sahi, Watir)

Continuous integration with buildbot

* Introduction to buildbot

* Discussion of concepts, demonstration.

* Integrating tests into buildbot.

* GUI testing in buildbot.

* Using pybots to test your open source project

Conclusion

* Why test, revisited

* Maintainability and testing

Testing Tools Panel at PyCon07: questions needed

I'd be very grateful if people who plan on attending the panel could add more questions or topics of interest either by directly editing the Wiki page, or by leaving a comment here, or by sending me an email at grig at gheorghiu.net. Thanks in advance!

Sunday, January 07, 2007

New Year's resolution

Here are some fragments from January 4th, on "Organizational inertia", which can be applied just as well to any software project ("bitrot" and "goldplating" come to mind):

"All organizations need to know that virtually no program or activity will perform effectively for a long time without modifications and redesign. Eventually every activity becomes obsolete."

"Businessmen are just as sentimental about yesterday as bureaucrats. They are just as likely to respond to the failure of a product or program by doubling the efforts invested in it. But they are, fortunately, unable to indulge freely in their predilections. They stand under an objective discipline, the discipline of the market. They have an objective outside measurement, profitability. And so they are forced to slough off the unsuccessful and unproductive sooner or later."

And how do you measure the efficiency of an organization? By testing, testing, testing:

"All organizations must be capable of change. We need concepts and measurements that give to other kinds of organizations what the market test and profitability yardstick give to business. Those tests and yardsticks will be quite different."

Friday, January 05, 2007

Cheesecake now including PEP8 checks

Here's a sample output of running pep8.py against one of the modules in the Cheesecake project. By default, pep8 reports only the first occurrence of each type of error or warning. The numbers after the file name are the line and column where the error/warning occurred:

$ python pep8.py logger.py
logger.py:1:11: E401 multiple imports on one line
logger.py:7:23: W291 trailing whitespace
logger.py:8:5: E301 expected 1 blank line, found 0
logger.py:40:33: W602 deprecated form of raising exception
logger.py:60:1: E302 expected 2 blank lines, found 1
logger.py:114:80: E501 line too long (85 characters)

If you want to see all occurrences, use the --repeat flag.

If you just want to see how many lines in a given file have PEP8-related errors/warnings, use the --statistics flag, along with -qq, which quiets the default output:

$ python pep8.py logger.py --statistics -qq
3 E301 expected 1 blank line, found 0
4 E302 expected 2 blank lines, found 1
1 E401 multiple imports on one line
1 E501 line too long (85 characters)
40 W291 trailing whitespace
1 W602 deprecated form of raising exception

You can also pass multiple file and directory names to pep8.py, and it will give you an overall line count when you use the --statistics flag.

So now cheesecake_index.py includes a check for PEP8 compatibility as part of the 'code kwalitee' index. To compute the PEP8 score, it only looks at types of errors and warnings, not at the line count for each type. It subtracts 1 from the code kwalitee score for each warning type reported by pep8, and 2 for each error type reported. Johann told me he'll try to come up with a scoring scheme within the pep8 module, so when that's ready I'll just use it instead of my ad-hoc one. Kudos to Johann for creating a very useful module.
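As a worked example of that ad-hoc scheme, here is a small Python sketch (a hypothetical helper, not the actual cheesecake_index.py code) that computes the penalty from the --statistics output. It counts distinct codes only and ignores the per-code line counts. For the logger.py statistics shown earlier there are 4 error types and 2 warning types, so the penalty is 4 × 2 + 2 × 1 = 10 points.

```python
def pep8_penalty(statistics_output):
    """Points subtracted from the code kwalitee score.

    Reads `pep8.py --statistics -qq` output ("<count> <code> <message>"),
    counting each distinct code once: 2 points per error (E) type,
    1 point per warning (W) type.
    """
    codes = set()
    for line in statistics_output.splitlines():
        fields = line.split()
        if len(fields) >= 2:
            codes.add(fields[1])
    errors = sum(1 for code in codes if code.startswith("E"))
    warnings = sum(1 for code in codes if code.startswith("W"))
    return 2 * errors + warnings
```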

Thursday, January 04, 2007

Tuesday, January 02, 2007

Martin Fowler's "Mocks Aren't Stubs" -- updated version
