Tell a story

People like stories. A good story transports you into a different world, if only for a short time, and teaches you important things even without appearing to do so. People's attention is captivated by good stories, and more importantly people tend to remember good stories.

The conclusion is simple: if you want your talk to be remembered, try to tell it as a story. This is hard work though, harder than just throwing bullet points at a slide, but it forces you as a presenter to get to the essence of what you're trying to convey to the audience.

A good story teaches a lesson, just like a good fable has a moral. In the case of a technical presentation, you need to talk about "lessons learned". To be even more effective, you need to talk about both positive and negative lessons learned, in other words about what worked and what didn't. Maybe not surprisingly, many people actually appreciate finding out more about what didn't work than about what did. So when you encounter failures during your software development and testing, write them down in a wiki -- they're all important, they're all lessons learned material. Don't be discouraged by them, but appreciate the lessons that they offer.

A good story provides context. It happens in a certain place, at a certain time. It doesn't happen in a void. Similarly, you need to anchor your talk in a certain context, you need to give people some points of reference so that they can see the big picture. Often, metaphors help (and metaphors are also important in XP practices: see this fascinating post by Kent Beck.)

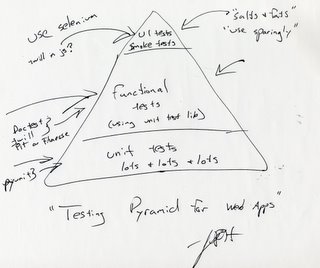

For example, when talking about testing, Titus and I used a great metaphor that Jason Huggins came up with -- the testing pyramid. Here's a reproduction of the autographed copy of the testing pyramid drawn by Jason. Some day this will be worth millions :-)

Jason compares the different types of testing with the different layers of the food pyramid. For good software health, you need generous servings of unit tests, ample servings of functional/acceptance tests, and just a smattering of GUI tests (which is a bit ironic, since Jason is the author of one of the best Web GUI testing tools out there, Selenium).

However, note that for optimal results you do need ALL types of testing, just as a balanced diet contains all types of food. It's better to have composite test coverage by testing your application from multiple angles than by having close to 100% test coverage via unit testing only. I call this "holistic testing", and the results that Titus and I obtained by applying this strategy to the MailOnnaStick application suggest that it is indeed a very good approach.

Coming back to context, I am reminded of two mini-stories that illustrate the importance of paying attention to context. We had the SoCal Piggies meeting before PyCon, and we were supposed to meet at 7 PM at USC. None of us checked if there were any events around USC that night, and in consequence we were faced with a nightmarish traffic, due to the Mexico-South Korea soccer game that was taking place at the Colisseum, next to USC, starting around the same time. I personally spent more than 1 hour circling USC, trying to break through traffic and find a parking spot. We managed to get 8 out of the 12 people who said will be there, and the meeting started after 8:30 PM. Lesson learned? Pay attention to context and check for events that may coincide with your planned meetings.

The second story is about Johnny Weir, the American figure skater who a couple of weeks ago was in second place at the Olympics after the short program. He had his sights on a medal, but he failed to note that the schedule for the buses transporting the athletes from the Olympic housing to the arena had changed. As a result, he missed the bus to the arena and had to wander on the streets looking for a ride. He almost didn't make it in time, and was so flustered and unfocused that he produced a sub-par perfomance and ended up in fifth place. Lesson learned? Pay attention to the context, or it may very well happen that you'll miss the bus.

In my "Agile documentation -- using tests as documentation" talk at PyCon I mentioned that a particularly effective test-writing technique is to tell a story and pepper it with tests (doctest and Fit/FitNesse are tools that allow you to do this.) You end up having more fun writing the tests, you produce better documentation, and you improve your test coverage. All good stuff.

(I was also reminded of storytelling while reading Mark Ramm's post from yesterday.)

Show, don't just tell

This ties again into the theme of storytelling. The best narratives have lots of examples (the funnier the better of course), so the story you're trying to tell in your presentation should have examples too. This is especially important for a technical conference such as PyCon. I think an official rule should be: "presenters need to show a short demo on the subject they're talking about."

Slides alone don't cut it, as I've noticed again and again during PyCon. When the presenter does nothing but talk while showing bullet points, the audience is quickly induced to sleep. If nothing else, periodically breaking out of the slideshow will change the pace of the presentation and will keep the audience more awake.

Slides containing illegible screenshots don't cut it either. If you need to show some screenshots because you can't run live software, show them on the whole screen so that people can actually make some sense out of them.

A side effect of the "show, don't tell" rule: when people have to actually show some running demo of the stuff they're presenting, they'll hopefully shy away from paperware/vaporware (or, to put it in Steve Holden's words, from software that only runs on the PowerPoint platform).

Even if the talk has only 25 minutes alloted to it, I think there's ample time to work in a short demo. There's nothing like small practical examples to help people remember the most important points you're trying to make. And for 5-minute lightning talks, don't even think about only showing slides. Have at most two slides -- one introductory slide and one "lesson learned" slide -- and get on with the demo, show some cool stuff, wow the audience, have some fun. This is exactly what the best lightning talks did this year at PyCon.

Provide technical details

This is specific to talks given at technical conferences such as PyCon. What I saw in some cases at PyCon this year was that the talk was too high-level, glossed over technical details, and thus it put all the hackers in the audience to sleep. So another rule is: don't go overboard with telling stories and anecdotes, but provide enough juicy technical bits to keep the hackers awake.

The best approach is to mix-and-match technicalities with examples and demos, while keeping focused on the story you want to tell and reaching conclusions/lessons learned that will benefit the audience. A good example that comes to mind is Jim Hugunin's keynote on IronPython at PyCon 2005. He provided many technical insights, while telling some entertaining stories and popping up .NET-driven widgets from the IronPython interpreter prompt. I for one enjoyed his presentation a lot.

So that you don't get the wrong idea that I'm somehow immune to all the mistakes I've highlighted in here, I hasten to say that my presentation on "Agile testing with Python test frameworks" at PyCon 2005 pretty much hit all the sore spots: all slides, lots of code, no demos, few lessons learned. As a result, it kind of tanked. Fortunately, I've been able to use most of the tools and techniques I talked about last year in the tutorial for this year, so at least I learned from my mistakes and I moved on :-)